I know it when I see it. That sentence has been used to explain everything from art to pornography, and it applies equally well to high definition TV. HDTV is a widely misunderstood term, and in a lot of ways it’s just something we all sort of agree on. There are standards, of course, but does that mean something that doesn’t meet those standards isn’t HDTV? Not at all. Let’s take a deeper look at this issue. I’ll explain why you can call almost anything HDTV today, and why 4K is just as confusing.

Standard definition

In order to understand HDTV, you first need to understand what HDTV definitely is not, and that’s SD. Standard definition is the term for the analog TV that existed before the mid-2000s, the old-school TV you grew up with. In the US, that’s a standard for broadcasting 525 lines of vertical resolution, of which 480 are actually used for picture. (The others are used for things like closed captioning.) In much of the rest of the world, the standard is 625 lines, of which 576 are used for picture.

So technically, anything with more than 480 lines of vertical resolution is high definition, at least in the US. But obviously 481 lines isn’t going to feel very high.

There are technical standards for over-the-air HD that were supposed to make things easier to understand, but most HD doesn’t actually come from an off-air antenna, and the broadcast standards make absolutely no sense for streaming.

What makes HD stand apart

Digital encoding

If you want to be super picky, HDTV doesn’t have to be digital. The first HDTV systems, used in the 1980s, were analog (these were prototype systems, not available to the public). The problem with analog HDTV is that it requires a LOT of bandwidth: one HD broadcast channel would take the space of 6-7 standard definition channels. That really didn’t work for a lot of people, but technically you could have a system that is HD and totally analog, even recording onto video tape.

Modern HDTV systems are digital so that the signal can be compressed. A digital HD signal fits in the same space as an old-school analog SD one because it’s been compressed to just a few percent of its original, uncompressed size. Sophisticated math is required on both ends to make this happen.
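To get a feel for how much squeezing that takes, here’s a back-of-the-envelope sketch. It assumes (these numbers are not from this article) 1920×1080 frames at 30 frames per second, 8-bit 4:2:0 sampling (12 bits per pixel on average), and the roughly 19.39 Mbit/s payload of a US ATSC 1.0 broadcast channel.

```python
# Rough estimate: uncompressed 1080-line HD vs. one broadcast channel.
# Assumptions: 30 fps, 8-bit 4:2:0 (12 bits/pixel average), ATSC 1.0 payload.

def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Uncompressed video bitrate in bits per second."""
    return width * height * fps * bits_per_pixel

ATSC_PAYLOAD_BPS = 19.39e6  # approximate total payload of one ATSC 1.0 channel

raw = raw_bitrate_bps(1920, 1080, 30, 12)
ratio = ATSC_PAYLOAD_BPS / raw  # share of the original size that survives

print(f"Uncompressed: {raw / 1e6:.1f} Mbit/s")
print(f"Compressed size as a share of original: {ratio:.1%}")
```

Under these assumptions the raw picture runs about 746.5 Mbit/s, so the channel carries roughly 2.6 percent of it. The real video share is a bit lower still, since audio and other data ride in the same channel.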

Over-the-air broadcasts use MPEG-2, the same compression scheme as DVDs, which was state of the art in the 1990s. Most satellite and cable broadcasts, however, use MPEG-4 (H.264), the standard used for Blu-ray discs in the 2000s and 2010s. It’s far more efficient. Streaming systems use even more advanced technology like H.265 so they can fit better quality into less space. None of these systems is inherently better at making a good picture; the newer ones just let a high-quality picture take up less bandwidth, and that’s good in itself.

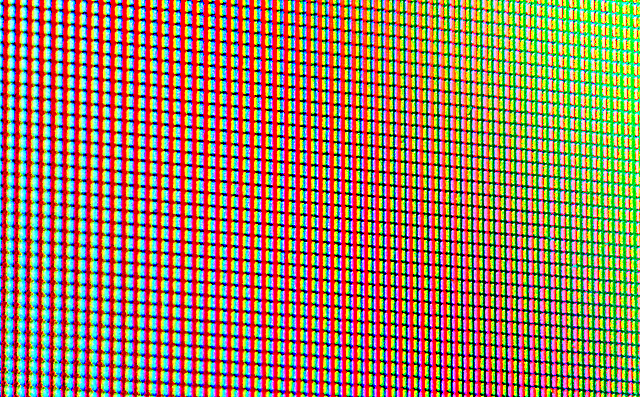

Resolution

The only real measure of HDTV is resolution. There are two basic standards for HDTV, one at 1280×720 pixels and one at 1920×1080 pixels. There are two because, initially, some people worried that 1920×1080 equipment was going to be too expensive. There are other technical details like frame rate and interlacing, but that’s not really the point here. The point is that if you’re looking for HD, what you’re really looking for is a resolution between 720 and 1080 vertical lines of picture.
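The rule of thumb above can be written as a tiny classifier. This is only an illustration of this article’s framing, not any official standard; the `classify_by_lines` function, its labels, and its thresholds are hypothetical choices made for the example.

```python
# Hypothetical rule-of-thumb classifier based on vertical lines of picture.
# Thresholds follow this article's framing; they are not an official standard.

def classify_by_lines(vertical_lines: int, sd_lines: int = 480) -> str:
    """sd_lines is the visible SD line count: 480 in the US, 576 in PAL/SECAM regions."""
    if vertical_lines <= sd_lines:
        return "SD"
    if vertical_lines < 720:
        return "technically HD, but won't look it"
    if vertical_lines <= 1080:
        return "HD"
    if vertical_lines == 2160:
        return "4K UHD"
    return "other"

for width, height in [(720, 480), (1280, 720), (1920, 1080), (3840, 2160)]:
    print(f"{width}x{height}: {classify_by_lines(height)}")
```

The `sd_lines` parameter exists because the “technically HD” cutoff depends on which SD standard you start from.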

Other measures of HD

Traditionally, HD uses a 16:9 widescreen picture while SD uses a 4:3 picture. However, you can have HD that’s 4:3, like when old TV shows are presented in HD. This is possible when the show was shot on film and the film is rescanned.
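When a 4:3 show is presented in HD, it usually fills the full height of a 16:9 frame with bars on the sides (pillarboxing). A quick sketch of the arithmetic, assuming the picture is centered and scaled to full frame height; `pillarbox` is a hypothetical helper name:

```python
from fractions import Fraction

def pillarbox(frame_w: int, frame_h: int, aspect: Fraction) -> tuple[int, int]:
    """Return (picture width, bar width per side) when a narrower picture
    fills the full height of a wider frame and is centered horizontally."""
    picture_w = int(frame_h * aspect)
    bar = (frame_w - picture_w) // 2
    return picture_w, bar

picture_w, bar = pillarbox(1920, 1080, Fraction(4, 3))
print(picture_w, bar)  # 1440 240
```

So a 4:3 picture in a 1080-line frame is 1440 pixels wide, with 240-pixel bars on each side.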

There’s also bitrate, which I’ll get into a little later. Bitrate is part of the discussion of overall quality, but since different compression schemes need different amounts of data for the same picture quality, it’s not as helpful as you would think.

What about 4K?

4K brings another whole level to the discussion. Consumer 4K video has a widescreen picture with a resolution of 3840×2160, exactly 4 times as many pixels as 1080-line HD. We tend to call an image 4K when it was actually produced in 4K, as opposed to a picture produced in HD or SD and just shown on a 4K TV. Yet the upscaling technology used to show HD on a 4K TV is so good that it’s often hard to tell the difference.
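The “4 times as many pixels” figure is easy to check. This short sketch just multiplies out the resolutions mentioned in this article:

```python
# Pixel counts for the resolutions discussed in this article.
RESOLUTIONS = {
    "720-line HD": (1280, 720),
    "1080-line HD": (1920, 1080),
    "4K UHD": (3840, 2160),
}

pixels = {name: w * h for name, (w, h) in RESOLUTIONS.items()}
for name, count in pixels.items():
    print(f"{name}: {count:,} pixels")

print(pixels["4K UHD"] / pixels["1080-line HD"])  # 4.0
print(pixels["4K UHD"] / pixels["720-line HD"])   # 9.0
```

Doubling both width and height quadruples the pixel count, which is why 4K is 4 times 1080-line HD and 9 times 720-line HD.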

The big differentiator with 4K is dynamic range. Dynamic range refers to the difference between the brightest and darkest parts of a picture, and it’s really what makes an HD or 4K picture sparkle. The dynamic range of HD is much better than SD, which is why SD looks a bit like peering through a dirty window. With 4K, a properly shot image can look stunning. HD pictures are called “SDR,” or “Standard Dynamic Range.” 4K TVs are capable of even more range, called “HDR,” or “High Dynamic Range,” which delivers quality beyond what you see with HD.
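One common way to put a number on the gap between brightest and darkest is in photographic stops: the base-2 logarithm of the ratio, so each stop is a doubling of brightness. The luminance values below are hypothetical, picked only to illustrate the math; they are not measurements of any TV or the limits of any standard.

```python
import math

def dynamic_range_stops(brightest_nits: float, darkest_nits: float) -> float:
    """Dynamic range in stops: each stop doubles the brightness."""
    if darkest_nits <= 0:
        raise ValueError("darkest level must be above zero")
    return math.log2(brightest_nits / darkest_nits)

# Hypothetical example levels, for illustration only.
print(round(dynamic_range_stops(100, 0.1), 1))   # 10.0
print(round(dynamic_range_stops(1000, 0.05), 1)) # 14.3
```

Because the scale is logarithmic, a display that gets much brighter and a bit darker at the same time gains several stops, not just a few percent.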

There are several kinds of HDR, and some are better than others. Right now, arguably the top of the heap is Dolby Vision, which not only gives the best possible range of colors and shades but also adapts to the specific TV you’re watching for a super duper experience.

Why does some HD look worse than others?

Netflix HD, especially in its early days, was really poor. The digital download versions of Star Wars pale in comparison to the Blu-ray discs. Yet both are HD. That’s because there’s a lot more to HD than lines of resolution. Even though the line count is enough to call a picture HD, that doesn’t mean it’s visually pleasing.

Whether an HD picture looks good or bad depends largely on its compression. The goal is to make it fit in a data stream of a certain size. If the content provider overcompresses the picture, it can look blurry, weak, or choppy, with all sorts of extra noise around images. This is less of a concern now than in the past. Why? Because people have a lot more streaming speed than they did before. Yet it still ends up being a problem. I recently watched Avengers: Age of Ultron on FXNOW, and truthfully, the whole thing looked like it was being shown through a gauzy curtain. Yet it was, at least by the numbers, HD.
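One rough way to see overcompression in numbers is bits per pixel: how much data the encoder gets to spend on each pixel of each frame. The bitrates below are hypothetical, not figures for any real service or disc, and the comparison only makes sense when both versions use the same compression scheme.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits spent on each pixel of each frame."""
    return bitrate_bps / (width * height * fps)

# Hypothetical bitrates for the same 1080-line, 24 fps movie.
stream = bits_per_pixel(5e6, 1920, 1080, 24)
disc = bits_per_pixel(30e6, 1920, 1080, 24)
print(f"stream: {stream:.2f} bits/pixel, disc: {disc:.2f} bits/pixel")
```

Both versions have exactly the same resolution, so both count as HD, but in this example the disc gets six times as much data per pixel, which leaves far less room for blur and noise.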

What can you do about all of this?

The real truth is that no one is going to go back and reclassify different kinds of HD, but at least in the 4K world we’re starting to see some new standards that make sense. There is only one consumer 4K/UHD resolution: 3840×2160. But then there are all the different audio formats, which I haven’t even touched on, and the different levels of dynamic range. So not all HD is the same, and, at least today, not all 4K is the same either. At this point, you get what you get.

Solid Signal doesn’t sell TVs…

…but we do sell all sorts of things to make the TV experience better. Shop our great selection or call us at 888-233-7563 for the best advice! We’re here during East Coast business hours.